It seems that almost every time we run one of our Intellegens webinars, variations on the same two questions come up in the Q&A: “How little data can I get away with?” and “How much data can it handle?”

The answers, of course, often depend on the details of the machine learning study in question. We’ve been thinking hard about those details while developing our latest release, the Alchemite™ 2023 Autumn Release.

So how would we summarise our current thinking on the size issue?

How little data?

It seems obvious that more data is always better: your machine learning model is likely to be more accurate if you give it more training data. But it isn’t as simple as that. You have to start somewhere, and that always means starting with the data you have. Acquiring some types of data can also be very expensive. In a recent webinar, for example, CPI described the immense cost of manufacturing a single sample of a biopharmaceutical. More data isn’t always the answer. The right cost/benefit balance is often to generate enough data to give you a useful model, provided this is coupled with a strong understanding of the uncertainty in the model’s predictions.

There isn’t a general answer to “how much is enough?”, but a good rule of thumb is to start from a dataset with more rows than columns (i.e., more measurements than descriptors and outputs). That said, Alchemite™ can handle sparse data, so it can still make predictions with fewer rows. In these situations accurate uncertainty quantification is critical, because it lets you make decisions based on the known reliability of each prediction. Uncertainty quantification is a real strength of Alchemite™.
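As a rough illustration of that rule of thumb, the short Python sketch below counts the rows and columns of a table held as a list of lists, with `None` marking a missing entry, and also reports how sparse the table is. The `dataset_summary` helper is hypothetical and is not part of Alchemite™.

```python
def dataset_summary(rows):
    """Summarise a tabular dataset for the rows-vs-columns rule of thumb.

    `rows` is a list of equal-length lists (one per sample); None marks a
    missing value. This helper is hypothetical and for illustration only.
    """
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    n_cells = n_rows * n_cols
    n_missing = sum(value is None for row in rows for value in row)
    return {
        "rows": n_rows,
        "columns": n_cols,
        # Rule of thumb: more measurements (rows) than descriptors + outputs (columns)
        "more_rows_than_columns": n_rows > n_cols,
        # Fraction of empty cells, i.e. how sparse the table is
        "fraction_missing": n_missing / n_cells if n_cells else 0.0,
    }


# Hypothetical data: three samples, four columns
# (e.g. two ingredient amounts, one process temperature, one measured property)
data = [
    [0.2, 1.5, 180, 42.0],
    [0.4, None, 200, None],
    [0.1, 2.0, None, 39.5],
]
print(dataset_summary(data))
```

Here the table has fewer rows than columns and a quarter of its cells are empty. That is the situation where a method that tolerates sparse data, paired with reliable uncertainty estimates, matters most.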

But what if you have less data than this, or none at all? Now you are in the realm of experimental design, and Alchemite™ can help here, too. Its ‘Initial experiments’ functionality lets you input information about your experimental objectives and then recommends which experiments to do first.
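Alchemite™’s ‘Initial experiments’ tool uses your objectives, and its method isn’t described here. For contrast, a generic, objective-free starting point that is widely used in design of experiments is a space-filling Latin hypercube design. The plain-Python sketch below assumes every input is a continuous variable with known lower and upper bounds. The function name, parameters and example ranges are illustrative only.

```python
import random


def latin_hypercube(n_samples, bounds, seed=None):
    """Return n_samples points that spread evenly over each input range.

    bounds: list of (low, high) pairs, one per continuous input variable.
    Each range is split into n_samples equal strata; every stratum gets
    exactly one point, and strata are paired at random across variables.
    """
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        width = (high - low) / n_samples
        # One random position inside each stratum...
        values = [low + (i + rng.random()) * width for i in range(n_samples)]
        # ...then shuffle so strata are combined randomly across inputs
        rng.shuffle(values)
        columns.append(values)
    # Transpose: one row per proposed experiment
    return [list(point) for point in zip(*columns)]


# Hypothetical example: 5 first experiments over two formulation inputs
# (a fraction from 0 to 1 and a temperature from 150 to 220)
for experiment in latin_hypercube(5, [(0.0, 1.0), (150.0, 220.0)], seed=1):
    print([round(v, 3) for v in experiment])
```

Every input’s range is covered evenly even with only five experiments, which gives a first model something to learn from across the whole design space.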

The important thing is to start using machine learning early. Generate some initial data, use it to train an ML model, get recommendations from the model on what to test next, then iterate until the model’s predictions are close to the target and their uncertainty is within acceptable bounds. This should result in 50–80% fewer experiments than using conventional design of experiments methods and then analysing the results with ML.
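The loop just described can be sketched in a few lines of Python. Everything here is a stand-in. `run_experiment` is a toy function playing the role of a lab measurement. The surrogate is a deliberately simple inverse-distance-weighted average whose “uncertainty” is just the distance to the nearest measured point. Neither is Alchemite™’s model or its uncertainty quantification. The sketch only shows the train, recommend, measure, repeat structure and the stopping rule of a prediction near the target with low uncertainty.

```python
import math


def run_experiment(x):
    """Toy stand-in for an expensive lab measurement (hypothetical)."""
    return math.sin(3 * x) + 0.5 * x


def predict(x, data):
    """Toy surrogate model (an assumption, not Alchemite's algorithm).

    Returns (mean, uncertainty). The mean is an inverse-distance-weighted
    average of measured results; the uncertainty is the distance to the
    nearest measured point, so it is zero where we have already measured.
    """
    for xi, yi in data:
        if xi == x:
            return yi, 0.0
    dists = [abs(x - xi) for xi, _ in data]
    weights = [1.0 / d ** 2 for d in dists]
    mean = sum(w * yi for w, (_, yi) in zip(weights, data)) / sum(weights)
    return mean, min(dists)


def design_loop(target, candidates, initial, tol=0.05, max_unc=0.02,
                kappa=1.0, budget=20):
    """Measure `initial`, then repeatedly recommend and measure one new
    candidate until some prediction is within `tol` of `target` with
    uncertainty <= `max_unc`. Returns (x, mean, uncertainty, n_measured),
    or None if the experimental budget runs out first."""
    data = [(x, run_experiment(x)) for x in initial]
    while True:
        # Stopping rule: a confident prediction close to the target
        for x in candidates:
            mean, unc = predict(x, data)
            if abs(mean - target) <= tol and unc <= max_unc:
                return x, mean, unc, len(data)

        measured = {xi for xi, _ in data}
        untested = [x for x in candidates if x not in measured]
        if not untested or len(data) >= len(initial) + budget:
            return None

        # Recommend the candidate that looks closest to the target,
        # with a bonus (kappa * uncertainty) for unexplored regions
        def score(x):
            mean, unc = predict(x, data)
            return abs(mean - target) - kappa * unc

        x_next = min(untested, key=score)
        data.append((x_next, run_experiment(x_next)))


candidates = [i / 100 for i in range(201)]  # possible settings 0.00 ... 2.00
result = design_loop(target=1.2, candidates=candidates, initial=[0.0, 1.0, 2.0])
print(result)
```

The `kappa` parameter sets the balance between exploring and exploiting. Raising it favours poorly explored settings, and lowering it trusts the current model’s predictions more.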

How much data?

‘Big data’, like beauty, is very much in the eye of the beholder. In some domains, it refers to terabytes of data (often wholly or partly unstructured). Alchemite™ can be part of a strategy for tackling such data, most likely by calling its computationally efficient algorithm through its API, alongside other tools that collate, organise, and process the data. Our scientific team is always fascinated to get involved in such projects.

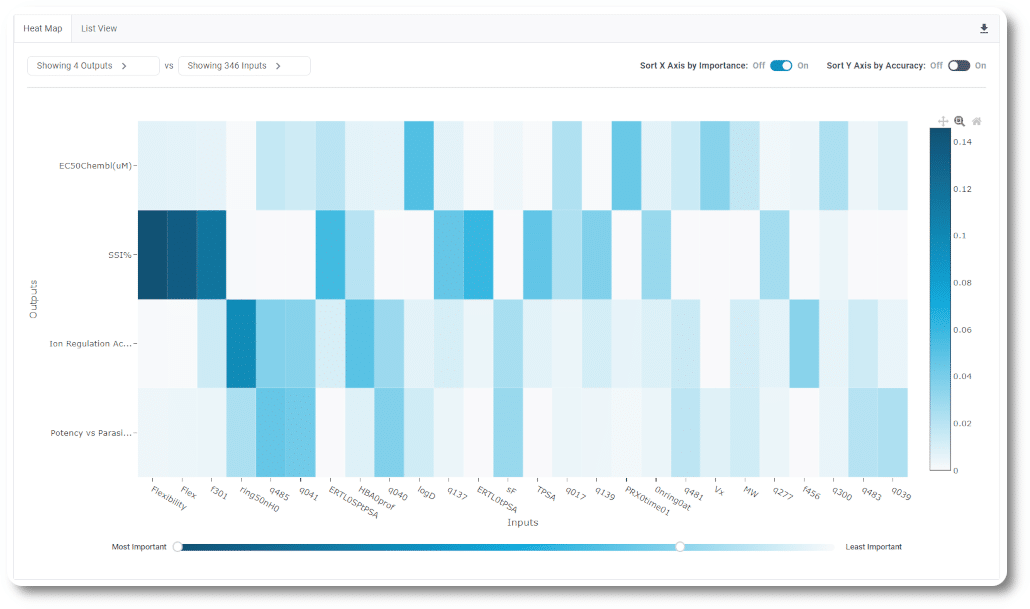

Using Alchemite™ as an everyday research tool through its web browser interface usually involves datasets that aren’t in this ‘big data’ league and can be handled in a spreadsheet. But even these can be large. While formulation datasets often have tens of rows and columns, it’s not unusual to find projects where thousands of rows represent different samples and hundreds of columns represent possible ingredients, processing options, or measured properties. Alchemite™ has been applied to life science problems with hundreds of thousands of rows of data. Large datasets need not only a fast algorithm but also the right tools to ease data manipulation and analysis – for example, being able to group columns of data easily, or to sort and filter analytics such as the importance chart (pictured above). Such tools make large, real-world experimental and process datasets routine fare for Alchemite™, which has the added advantage over most ML methods of building useful models even when the data are sparse and noisy.

The time is now!

Underlying those “how much data” webinar questions is another question: “When should I start with ML, or is it already too late?” The answer to the first part is always “Now!”, provided ML is viewed as a tool that enhances and supports experimental expertise and effort. And it’s never too late to work out strategies for applying ML to large existing datasets, given its ability to find patterns that might otherwise be missed. Now is also a good time because the latest Alchemite™ release includes enhanced uncertainty quantification, an improved ‘initial experiments’ tool, algorithmic speedups, and new analytics and display features for large datasets. All of this supports the conclusion we’ve reached after looking at datasets of all sizes across many R&D projects: the wise approach is simply to give it a go!